Section: New Results

Trajectory Clustering for Activity Learning

Participants : Jose Luis Patino, Guido Pusiol, Hervé Falciani, Nedra Nefzi, François Brémond, Monique Thonnat.

Discovering significant activities in a video sequence in an unsupervised manner, and learning models of those activities, is of central importance for building a reliable activity recognition system. We have deepened our studies on activity extraction employing trajectory information. In previous work we have shown that rich descriptors can be derived from trajectories; they help us to analyze the scene occupancy and its topology and also to identify activities [67], [68], [70], [55]. Our new results show how trajectory information can be employed more precisely, alone or in combination with other features, for the extraction of activity patterns. Three application domains are currently being explored: 1) Monitoring of elderly people at home; 2) Monitoring the ground activities at an airport dock-station (COFRIEND project (http://www-sop.inria.fr/pulsar/personnel/Guido.Pusiol/Home4/index.php)); 3) Monitoring activities in subway/street surveillance systems.

Monitoring of elderly people at home

We propose a novel framework to understand daily activities in home-care applications; the framework is capable of discovering, modeling and recognizing long-term activities (e.g. “Cooking”, “Eating”) occurring in unstructured scenes (e.g. an apartment).

The framework links visual information (i.e., tracked objects) to the discovery and recognition of activities by constructing an intermediate layer of primitive events automatically.

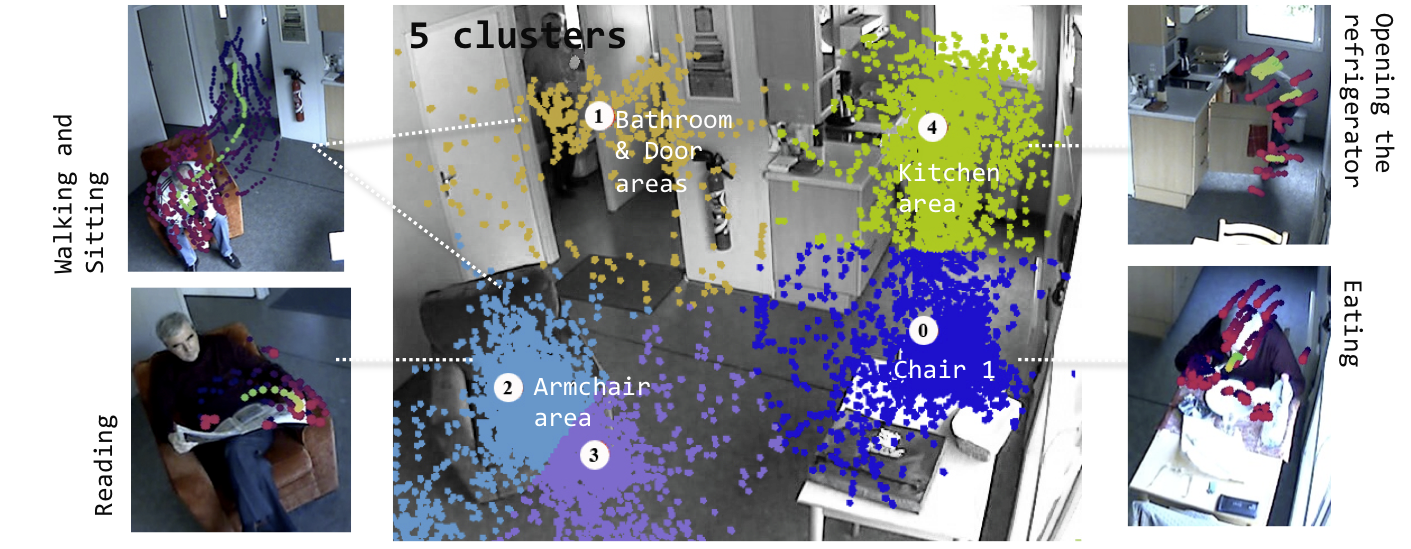

The primitive events characterize the global spatial movements of a person in the scene (“in the kitchen”), and also the local movements of the person's body parts (“opening the oven”). The primitive events are built from interesting regions, which are learned at multiple semantic resolutions (e.g. the “oven” is inside the “kitchen”). An example of the regions and possible activities for a single resolution is displayed in Fig. 22.

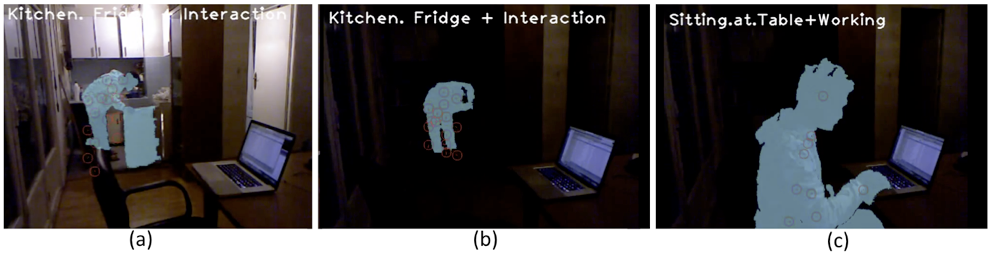
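
As a rough illustration of what regions at multiple semantic resolutions could look like in code, the sketch below maps a tracked position to one region label per resolution, from coarse to fine. It is only a minimal sketch: in the framework the regions are learned, whereas here they are hard-coded axis-aligned rectangles, and the `Region` class, the `RESOLUTIONS` table, the coordinates and the `locate` function are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle on the ground plane (hypothetical representation)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Assumed, hand-written regions; the real system learns them from data.
# Index 0 is the coarsest resolution, index 1 a finer one.
RESOLUTIONS = [
    [Region("kitchen", 0, 0, 4, 3), Region("living room", 5, 0, 9, 3)],
    [Region("oven", 0, 0, 1, 1), Region("sink", 2, 0, 3, 1), Region("sofa", 6, 1, 8, 2)],
]


def locate(x: float, y: float) -> List[Optional[str]]:
    """Return one region name per resolution (None if no region matches)."""
    labels = []
    for level in RESOLUTIONS:
        hit = next((region.name for region in level if region.contains(x, y)), None)
        labels.append(hit)
    return labels


print(locate(0.5, 0.5))  # position near the oven, inside the kitchen
print(locate(3.5, 2.0))  # in the kitchen, but in no fine-grained region
```

A position can thus be described as “in the kitchen” at the coarse level and “at the oven” at the finer one, which is the kind of nesting the text describes.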

A probabilistic model is learned to characterize each discovered activity. The modeled activities are automatically recognized in new unseen videos where a pop-up with a semantic description appears when an activity is detected. Examples of semantic labels are illustrated in Fig. 23 (a, b, c).

Recently we introduced 3D information (Microsoft Kinect) to the system. The preliminary results show an improvement of more than 30% in recognition quality. The system can also recognize activities in challenging situations such as a lack of light (see Fig.2 (b) and Fig.2 (c)).

The approach can be used to recognize most of the interesting activities in a home-care application and has been published in [43]. Other examples and applications are available online at http://www-sop.inria.fr/pulsar/personnel/Guido.Pusiol/Home4/index.php .

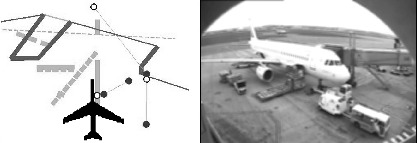

Monitoring the ground activities at an airport dock-station

The COFRIEND project aims at creating a system for the recognition and interpretation of human activities and behaviours at an airport dock-station. Our contribution is a novel approach for discovering, in an unsupervised manner, the significant activities from observed videos. Spatial and temporal properties of detected mobile objects are modeled with soft computing relations, that is, spatio-temporal relations graded with different strengths. Our system works off-line and is composed of three modules: the trajectory speed analysis module, the trajectory clustering module, and the activity analysis module. The first module segments each trajectory into segments of fairly similar speed (tracklets). The second obtains behavioural displacement patterns indicating the origin and destination of mobile objects observed in the scene. We achieve this by clustering the mobile tracklets and also by discovering the topology of the scene. The third module extracts more complex patterns of activity, which include spatial information (coming from the trajectory analysis) and temporal information related to the interactions of mobiles observed in the scene, either between themselves or with contextual elements of the scene. A clustering algorithm based on the transitive closure calculation of the final relation allows finding spatio-temporal patterns of activity. This approach has been applied to a database containing nearly 25 hours of recordings of dock-station monitoring at the Toulouse airport. The discovered activities are: “GPU positioning”, “Handler deposits chocks”, “Frontal unloading operation”, “Frontal loading operation”, “Rear loading operation”, “Push back vehicle positioning”. An example of a discovered activity (Frontal loading) is given in Fig. 24.
When comparing our results with the explicit ground-truth given by a domain expert, we were able to identify the events, in general, with a temporal overlap of at least 50%. A comparison with a supervised method on the same data indicates that our approach extracts the interesting activities signalled in the ground-truth with a higher True Positive Rate (80% TPR with our unsupervised method against 74% TPR for the supervised approach). This work has been published in [42].
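
To make the idea of clustering through the transitive closure of a graded relation more concrete, here is a minimal sketch. It assumes a reflexive, symmetric relation with strengths in [0, 1], uses max-min composition for the closure, and reads clusters off an alpha-cut; the report does not state which composition or threshold is actually used, so these choices, the function names and the toy relation `R` are assumptions made for illustration only.

```python
def max_min_compose(a, b):
    """Max-min composition of two square fuzzy relations given as nested lists."""
    n = len(a)
    return [[max(min(a[i][k], b[k][j]) for k in range(n)) for j in range(n)]
            for i in range(n)]


def transitive_closure(relation):
    """Iterate R <- max(R, R o R) until the relation stops changing."""
    n = len(relation)
    closure = [row[:] for row in relation]
    while True:
        composed = max_min_compose(closure, closure)
        merged = [[max(closure[i][j], composed[i][j]) for j in range(n)]
                  for i in range(n)]
        if merged == closure:
            return closure
        closure = merged


def alpha_cut_clusters(closure, alpha):
    """Group items whose closure strength is at least alpha.

    For a reflexive, symmetric relation the closure is a similarity relation,
    so every alpha-cut is an equivalence relation and the groups form a partition.
    """
    clusters, assigned = [], set()
    for i in range(len(closure)):
        if i in assigned:
            continue
        members = [j for j, strength in enumerate(closure[i]) if strength >= alpha]
        clusters.append(members)
        assigned.update(members)
    return clusters


# Toy relation between four observations (made-up strengths).
R = [
    [1.0, 0.8, 0.2, 0.1],
    [0.8, 1.0, 0.6, 0.1],
    [0.2, 0.6, 1.0, 0.3],
    [0.1, 0.1, 0.3, 1.0],
]
closure = transitive_closure(R)
print(alpha_cut_clusters(closure, 0.7))  # [[0, 1], [2], [3]]
print(alpha_cut_clusters(closure, 0.5))  # [[0, 1, 2], [3]]
```

Lowering the threshold merges groups, so a single closure yields a hierarchy of patterns from specific to general.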

Monitoring activities in subway/street surveillance systems

In this work we have built a system to extract from video, in an unsupervised manner, the main activities that can be observed in a subway scene. We have set up a processing chain that works broadly in three steps. In a first step, the system learns the main activity areas of the scene in an unsupervised manner. In a second step, mobile objects are characterized in relation to the learned activity areas: either as “staying in a given activity zone” or “transferring from an activity zone to another”, or as a sequence of these two behaviours if the tracking persists long enough. In a third step we employ a high-level relational clustering algorithm to group mobiles according to their behaviours and to discover other characteristics of mobile objects which are strongly correlated. We have applied this algorithm to two domains. In the first, monitoring two hours of activities in the entrance hall of an underground station, we showed which areas of the scene are the most active and how rare/abnormal activities (going to low-occupancy activity zones) and frequent activities (e.g. buying tickets) are characterized. In the second application, monitoring one hour of a bus street lane, we were again able to learn the topology of the scene and separate normal from abnormal activities. When comparing with the available ground-truth for this application, we obtained a high recall measure (0.93) with an acceptable precision (0.65). This precision value is mostly due to the different levels of abstraction between the discovered activities and the ground-truth. The incremental learning procedure employed in this work is published in [52], while the full activity extraction approach was published in [41].
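
The second step of the chain, describing a mobile as staying in a zone, transferring between zones, or a sequence of both, can be sketched as follows. This is a minimal sketch under stated assumptions: each tracked object is taken to arrive as a per-frame list of zone labels (`None` when outside every learned zone), and the function name `describe_behaviour`, the `min_stay_frames` threshold and the zone names are hypothetical, not taken from the system itself.

```python
from itertools import groupby


def describe_behaviour(zone_per_frame, min_stay_frames=3):
    """Turn a per-frame zone sequence into 'stay' and 'transfer' behaviours.

    zone_per_frame: list of zone names, with None for frames outside any zone.
    min_stay_frames: assumed minimum run length for a visit to count as a stay.
    """
    # Collapse consecutive identical labels into (zone, run_length) pairs.
    runs = [(zone, len(list(group))) for zone, group in groupby(zone_per_frame)]

    behaviours = []
    previous_zone = None
    for zone, length in runs:
        if zone is None:
            continue  # frames outside every activity zone carry no label
        if previous_zone is not None and zone != previous_zone:
            behaviours.append(("transfer", previous_zone, zone))
        if length >= min_stay_frames:
            behaviours.append(("stay", zone))
        previous_zone = zone
    return behaviours


track = ["hall", "hall", "hall", None,
         "ticket machine", "ticket machine", "ticket machine", "ticket machine",
         "gates"]
for behaviour in describe_behaviour(track):
    print(behaviour)
```

The resulting tuples are the kind of symbolic description that a relational clustering step could then group across mobiles.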